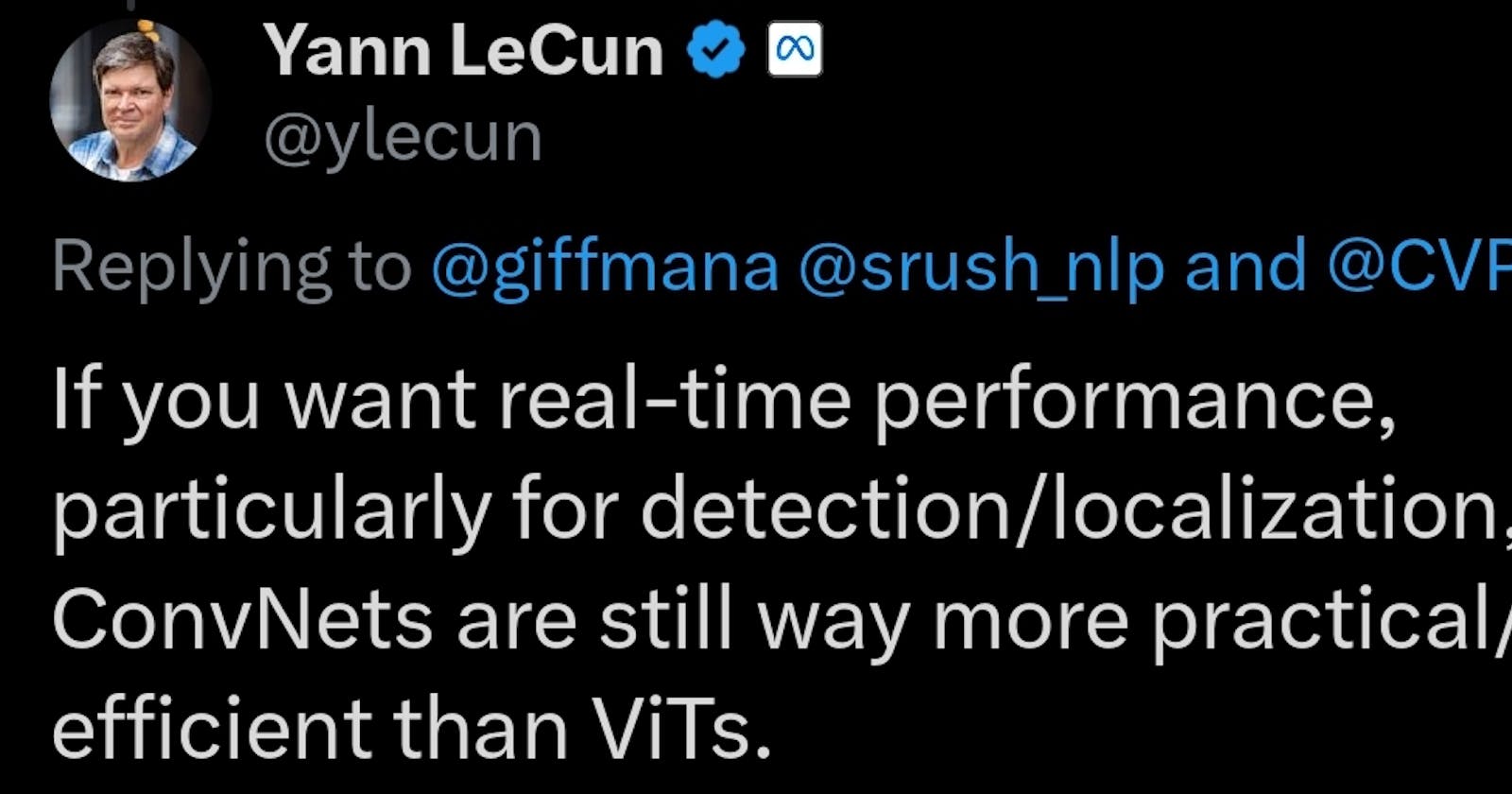

Yann LeCun's tweet is spot on. My final-year project focuses on vision transformers, and I can attest that they excel at vision tasks, possibly even surpassing convolutional neural networks (convnets). However, transformers are resource-hungry: they rely heavily on GPUs to run efficiently, which makes them impractical to deploy on edge devices. Does that make them useless? Not necessarily. In the 90s, people likewise doubted that convnets were feasible on the hardware of the time, yet advances in hardware eventually made them practical, paving the way for AlexNet and transforming computer vision. We may just need to wait for a similar breakthrough moment for transformers, when capable hardware becomes more affordable.